Babak Rahmani

I’m Babak Rahmani, a Researcher at Microsoft Research (Cambridge, UK) and a Visiting Researcher (sabbatical) at BethgeLab (Tübingen ELLIS & AI Center). My research sits at the intersection of model architecture, efficient training and inference, and agentic systems. I aim to make foundation models more reliable on long-horizon tasks (e.g., code generation and verification via execution state tracking) and more efficient through recurrent/implicit computation and systems-aware design. I work end-to-end, from problem framing and dataset/trace construction through large-scale training (7B-class models; 200B+ token runs) to evaluation and post-training (alignment/RL), with an engineering bias toward reproducibility and clean interfaces. I did my PhD in Electrical Engineering at EPFL (Lausanne, Switzerland), supervised by Christophe Moser and Demetri Psaltis.

News

- Feb 2026: Blog post: “Debugging CWMs: Where Traces Help (and Why They Fail)”

- Sep 2025: Joined BethgeLab (Tübingen ELLIS & AI Center) as a Visiting Researcher (sabbatical).

- Sep 2025: Two Nature papers (“AOC” and “Training Deep Physical Neural Networks”) published on the same day.

- Apr 2025: ASAP Seminar talk: “Implicit Language Models are RNNs” — video, paper, slides

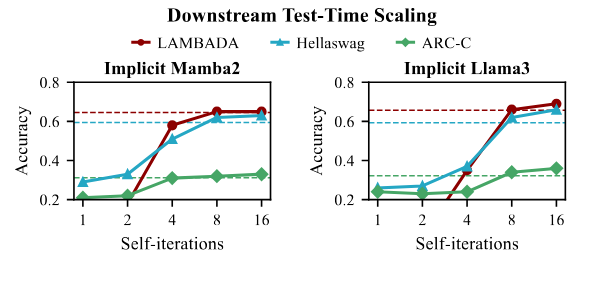

- 2025: ICML Spotlight: “Implicit Language Models are RNNs: Balancing Parallelization and Expressivity”.

- 2024: Assistant Program Chair, NeurIPS 2024.

- 2023–2024: Co-organizer, NeurIPS workshop MLNCP (Machine Learning with New Compute Paradigms).

- 2023: Marie Skłodowska-Curie Fellowship (BiTFormer) — score 100/100 (≈ 9% success rate).

- Nov 2023: “Backpropagation-free Training of Deep Physical Neural Networks” accepted to Science.

- Sep 2022: Paper accepted to NeurIPS 2022.

- Jul 2022: Started at Microsoft Research (Cambridge, UK).

- Mar 2022: PhD completed at EPFL.

- Oct 2021: Paper accepted to NeurIPS ML4PhysicalSciences workshop.

- Jul 2020: Paper accepted to Nature Machine Intelligence.

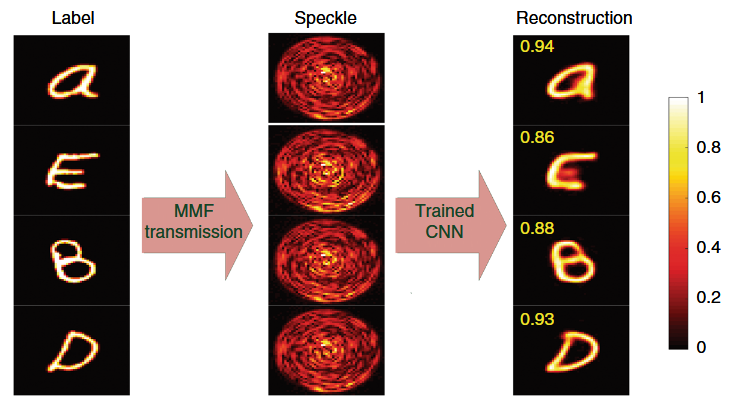

- Dec 2019: “Multimode optical fiber transmission with a deep learning network” recognized as a top-downloaded paper in Nature Light: Science & Applications.

Selected publications

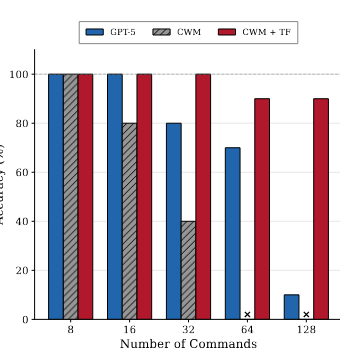

Debugging code world models

arXiv (2026)

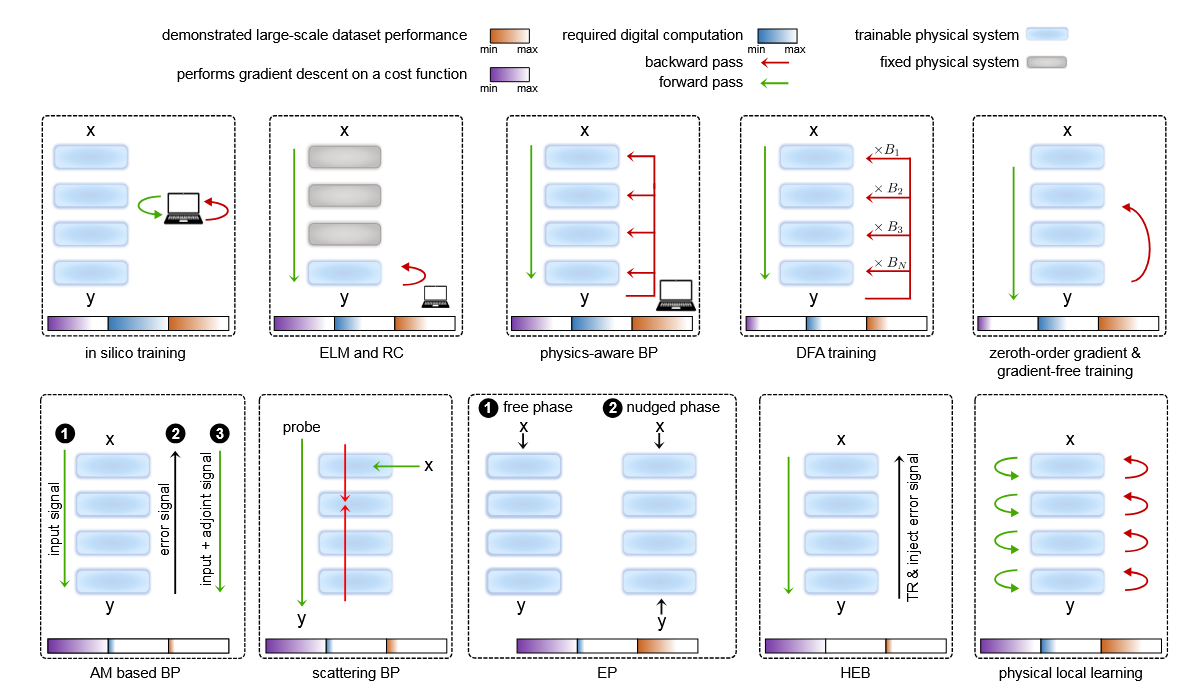

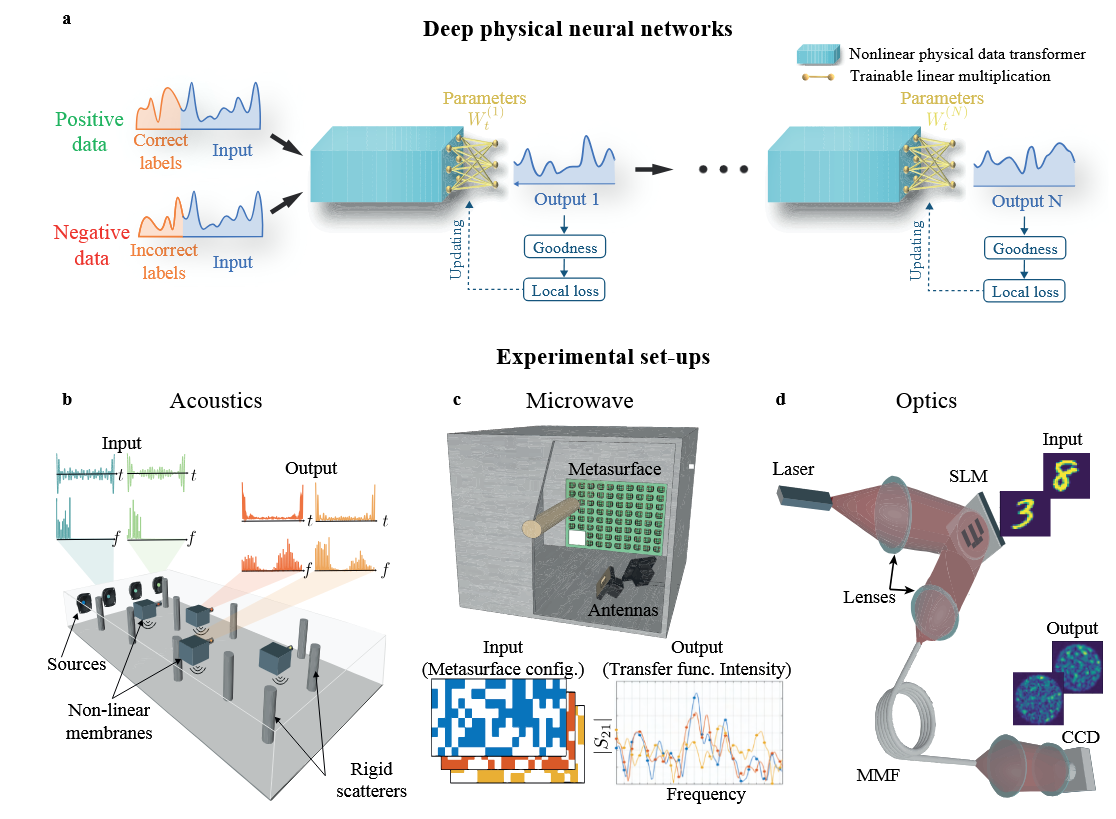

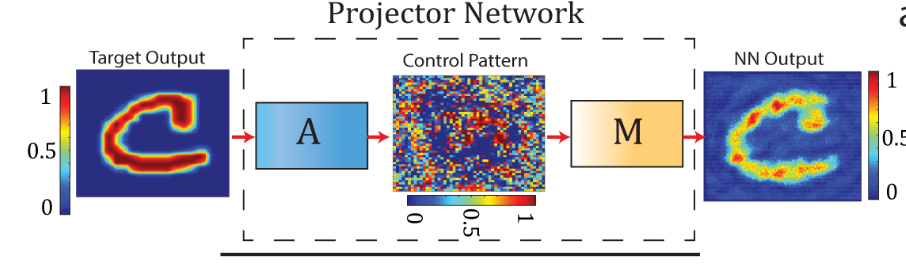

Training Deep Physical Neural Networks

Nature (2025)

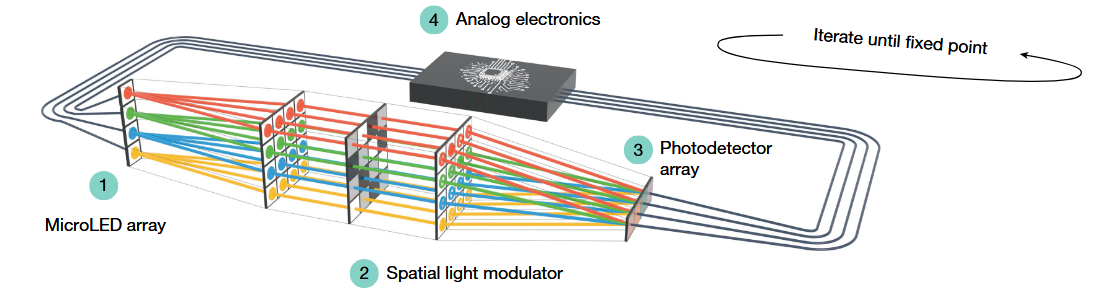

AOC

Nature (2025)

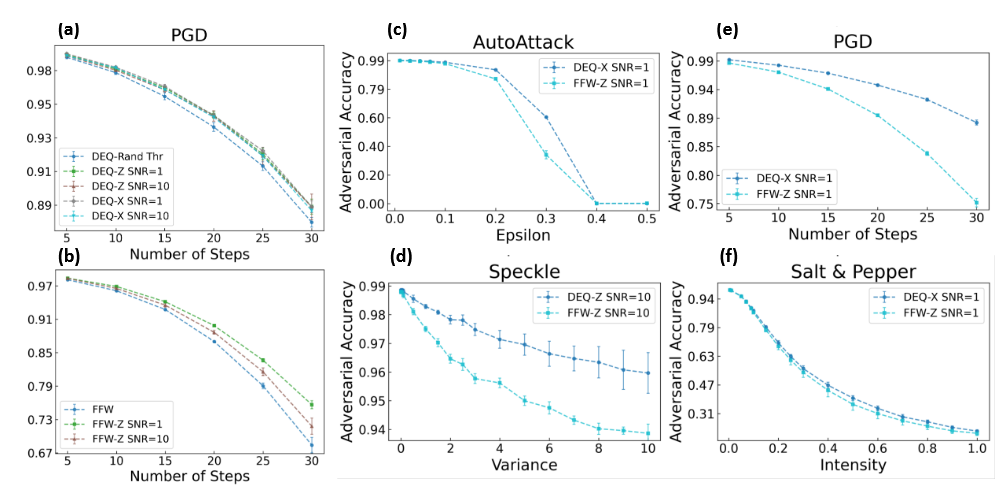

Regularizing the Infinite: Improved Generalization Performance with Deep Equilibrium Models

NeurIPS 2024 Workshop: Machine Learning with New Compute Paradigms (MLNCP) (2024)

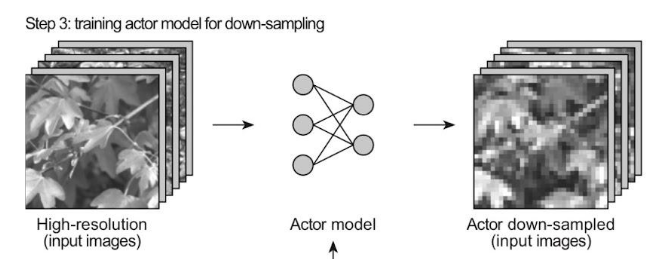

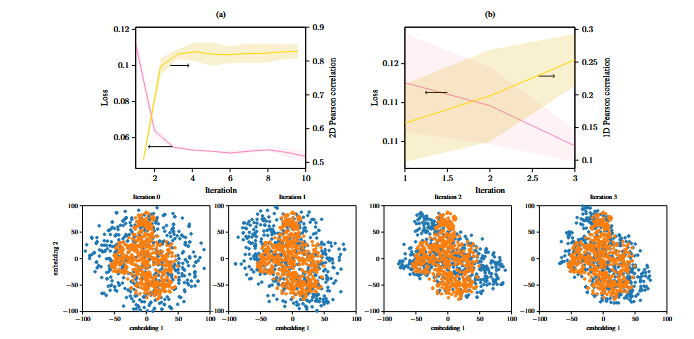

An actor-model framework for visual sensory encoding

Nature Communications (2024)

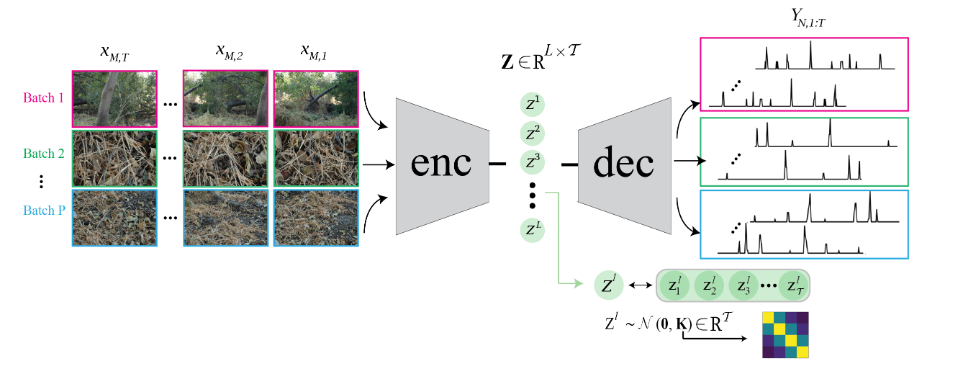

Natural image synthesis for the retina with variational information bottleneck representation

NeurIPS Proceedings (2022)

Variational framework for partially-measured physical system control

Fourth Workshop on Machine Learning and the Physical Sciences, NeurIPS 2021 (2021)

Multimode optical fiber transmission with a deep learning network

Nature Light: Science & Applications (2018)